Note: Read Custom Robots before continuing this article.

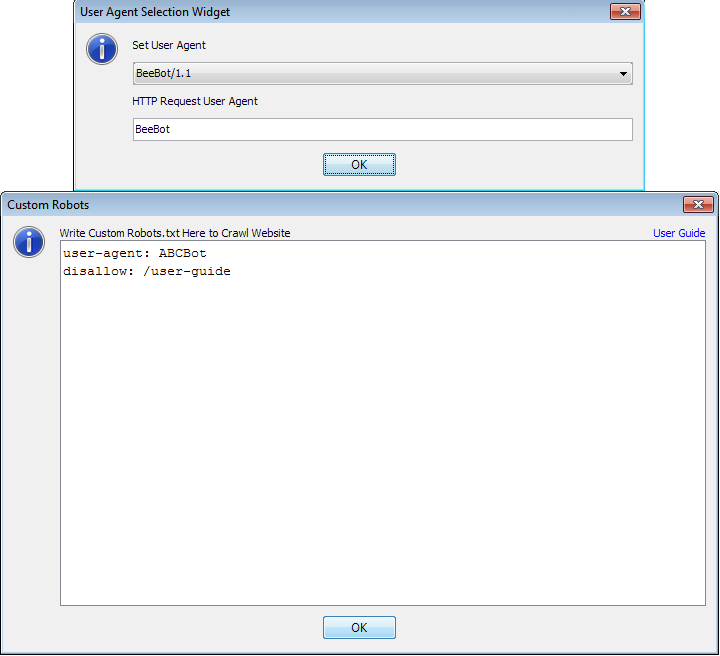

‘Custom Robots’ is a feature for crawling a website with an editable robots.txt, so that you can understand how the actual robots.txt would behave. If ‘Custom Robots’ is enabled, BeeBot will crawl the website according to the directives stated in the custom robots. This behavior can differ depending on the user agent. For instance, if the user agent selected in the ‘user agent’ section under the ‘spider’ menu is BeeBot and the directives in the custom robots are written for GoogleBot, the crawler will not follow any robots directives. The snapshot above and the cases below illustrate this.

In the snapshot above, the selected user agent is ‘BeeBot’, while the custom robots are defined for ‘ABCBot’. In this case no robots directives will be followed.
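
The same outcome can be reproduced with Python's standard `urllib.robotparser`. This is only a rough sketch, not a description of how BeeBot works internally, and its matching rules may differ. The `Disallow: /private` rule and the example path are assumptions made up for illustration; they are not taken from the snapshot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical custom robots: the only section is addressed to 'ABCBot'.
custom_robots = """\
User-agent: ABCBot
Disallow: /private
"""

parser = RobotFileParser()
parser.parse(custom_robots.splitlines())

# 'BeeBot' matches no section, so no directive restricts it.
beebot_allowed = parser.can_fetch("BeeBot", "/private/page")
# 'ABCBot' matches its own section and is blocked from /private.
abcbot_allowed = parser.can_fetch("ABCBot", "/private/page")

print(beebot_allowed, abcbot_allowed)
```

Because no section names ‘BeeBot’ and there is no ‘*’ section to fall back on, the parser treats every path as allowed for ‘BeeBot’.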

Different Cases with Custom Robots:

Case 1:

User Agent Selected = *

User Agent in Custom Robots = any string

Result:

Because ‘*’ is a wildcard, the defined custom robots will be followed in every case.

Case 2:

User Agent Selected = BeeBot

Custom Robots

User-agent: Some-Bot

Disallow: /user-guide

User-Agent: Some-Other-Bot

Disallow: /some-other-user-guide

User-Agent: *

Disallow: /no-directory

Result:

The selected user agent ‘BeeBot’ does not match the first or second section of the robots, so the last section will be followed for the website, i.e.:

User-Agent: *

Disallow: /no-directory
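
The section selection in Case 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not BeeBot's actual implementation: `select_group` is a hypothetical helper, it assumes one `User-agent` line per section, it matches names exactly (ignoring case), and it does not model the special handling of a selected ‘*’ described in Case 1.

```python
def select_group(robots_txt, selected_agent):
    """Return the Disallow paths of the section that applies to selected_agent.

    Returns None when neither a matching section nor a '*' section exists,
    which corresponds to "no robots will be followed".
    """
    groups = {}      # lower-cased user-agent name -> list of disallowed paths
    current = None   # name of the section currently being read
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and whitespace
        if not line or ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            current = value.lower()
            groups.setdefault(current, [])
        elif field == "disallow" and current is not None:
            groups[current].append(value)

    agent = selected_agent.lower()
    if agent in groups:
        return groups[agent]      # a section written for this agent
    return groups.get("*")        # otherwise fall back to the '*' section


# The custom robots from Case 2.
case_2_robots = """\
User-agent: Some-Bot
Disallow: /user-guide

User-Agent: Some-Other-Bot
Disallow: /some-other-user-guide

User-Agent: *
Disallow: /no-directory
"""

print(select_group(case_2_robots, "BeeBot"))   # ['/no-directory']
```

With a custom robots file that contains only a section for another bot and no ‘*’ section, the same function returns `None`, which matches the earlier situation where no robots are followed.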
